Statistics

Pop stats for big geodata

A universal high-performance computing interface allows popular statistical tools to run efficiently on large geospatial datasets.

Even the most computationally demanding statistical analyses on spatial data could run many times faster by using a universal high-performance computing (HPC) interface developed for one of the world’s most widely used statistics tools.

Free open source software is the backbone of research across many scientific disciplines, and the R statistics environment is probably the most popular and well-developed open source tool available to scientists. R does almost everything a researcher might need using everyday computers; its only real limitation is the time it takes to run on very large datasets.

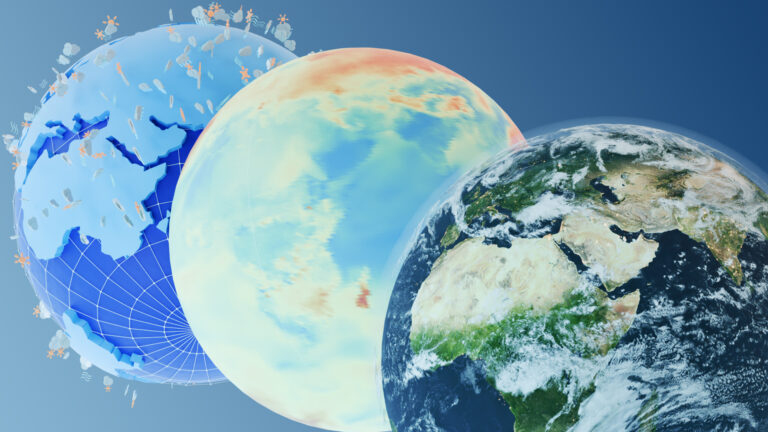

“R is a free programming language for statistical computing and graphics,” says KAUST researcher Sameh Abdulah. “However, existing R packages can really only handle small data sizes because each computation is run sequentially on a single processor—it is simply not feasible to use these packages to analyze large-scale climate and weather data, for example.”

Abdulah and his colleagues—including David Keyes, Marc Genton and Ying Sun from KAUST’s Statistics Program and the Extreme Computing Research Center—have been exploring ways to change this by improving the capabilities of existing statistical packages using the parallel processing power of most desktop computers.

“We want to extend statistical tools like R to large-scale datasets by implementing them using high-performance computing languages,” says Abdulah. “Our goal is for any statistician to be able to run experiments in R with only a very abstract understanding of the high-performance hardware like graphics processors and distributed memory systems that they may be using.”

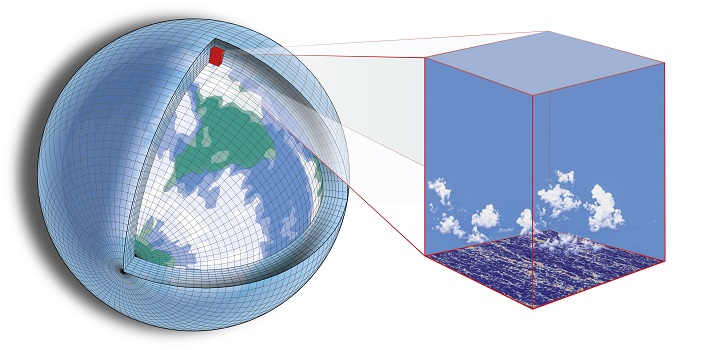

The ExaGeoStatR package, developed by Abdulah and the team, gives R access to existing parallel linear algebra libraries and uses a high-level representation of the underlying computing hardware. This lets it run large-scale spatial modeling and prediction tasks across multiple processes simultaneously, which speeds up the statistical processing significantly.
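To give a feel for why this workload needs parallel linear algebra, here is a minimal sketch of one common core computation in Gaussian-process spatial modeling: the log-likelihood of n observations, which requires factorizing an n × n covariance matrix. It assumes a zero-mean field and an exponential covariance (the Matérn model with smoothness 0.5). The model choice, the parameters `variance` and `range_`, and every function name are hypothetical illustrations, not ExaGeoStatR's code or API. The matrix holds n² entries, and the Cholesky step costs on the order of n³ operations, so doubling the number of locations multiplies that work by roughly eight.

```python
import math

# Hypothetical illustration only (not ExaGeoStatR code): zero-mean Gaussian
# field with an exponential covariance, i.e. Matern with smoothness 0.5.

def exp_cov_matrix(locs, variance, range_):
    """n x n covariance matrix with entries variance * exp(-distance / range_)."""
    return [[variance * math.exp(-math.dist(p, q) / range_) for q in locs]
            for p in locs]

def cholesky(a):
    """Lower-triangular L with a = L L^T; the O(n^3) step that parallel
    dense linear algebra libraries are built to accelerate."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        s = a[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if s <= 0.0:
            raise ValueError("covariance matrix is not positive definite")
        L[j][j] = math.sqrt(s)
        for i in range(j + 1, n):
            L[i][j] = (a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    return L

def forward_solve(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def gaussian_loglik(z, locs, variance, range_):
    """Log-likelihood of observations z at locations locs under the model above."""
    n = len(z)
    L = cholesky(exp_cov_matrix(locs, variance, range_))
    w = forward_solve(L, z)                                   # z^T Sigma^-1 z = w^T w
    logdet = 2.0 * sum(math.log(L[i][i]) for i in range(n))   # log|Sigma|
    return -0.5 * (n * math.log(2.0 * math.pi) + logdet + sum(v * v for v in w))
```

In maximum likelihood estimation, a function like `gaussian_loglik` is typically evaluated many times while an optimizer searches over `variance` and `range_`, and each evaluation repeats the factorization. That repetition is why the linear algebra dominates run time as datasets grow.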

Testing the package on large-scale spatial climate and weather datasets of up to 250,000 observations across a range of computing architectures, the researchers measured speedups of up to 33 times over existing spatial statistics packages.

“Our experiments using spatial data, comprising weather measurements taken at many irregular locations, also showed that ExaGeoStatR is able to model the data accurately and predict missing measurements at unobserved locations,” says Abdulah. “With the existence of parallel hardware architectures on most of today’s personal computers, whether it’s a multicore processor or graphics processor, our package allows almost any statistician to easily tackle large-scale spatial problems.”
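Predicting measurements at unobserved locations is usually done by kriging, which weights the observed values according to their modeled covariance with the target location. The sketch below is a minimal illustration, assuming simple kriging with a known zero mean and the same kind of exponential covariance; the choice of simple kriging, the default parameter values and the function names are hypothetical, not taken from ExaGeoStatR.

```python
import math

# Hypothetical illustration only: simple kriging (known zero mean) with an
# exponential covariance; parameter defaults are arbitrary.

def exp_cov(p, q, variance=1.0, range_=1.0):
    """Exponential covariance between two locations."""
    return variance * math.exp(-math.dist(p, q) / range_)

def solve_spd(a, b):
    """Solve a x = b for symmetric positive-definite a via Cholesky (a = L L^T)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        L[j][j] = math.sqrt(a[j][j] - sum(L[j][k] ** 2 for k in range(j)))
        for i in range(j + 1, n):
            L[i][j] = (a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    y = [0.0] * n
    for i in range(n):                      # forward substitution: L y = b
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):            # back substitution: L^T x = y
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def krige(obs_locs, z, target, variance=1.0, range_=1.0):
    """Simple-kriging prediction and its variance at an unobserved target."""
    sigma = [[exp_cov(p, q, variance, range_) for q in obs_locs] for p in obs_locs]
    c0 = [exp_cov(p, target, variance, range_) for p in obs_locs]
    w = solve_spd(sigma, c0)                # kriging weights: Sigma w = c0
    mean = sum(wi * zi for wi, zi in zip(w, z))
    var = variance - sum(wi * ci for wi, ci in zip(w, c0))
    return mean, var
```

Predicting at an observed location returns that observation with zero variance, which makes a quick sanity check; moving the target away from the data pulls the prediction toward the zero mean and raises the variance toward `variance`. As with the likelihood, the expensive part is solving with the n × n covariance matrix.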

References

- Abdulah, S., Li, Y., Cao, J., Ltaief, H., Keyes, D., Genton, M. & Sun, Y. ExaGeoStatR: Harnessing HPC capabilities for large scale geospatial modeling using R. The International Conference for High Performance Computing, Networking, Storage and Analysis, Denver, Colorado, 17–22 November 2019.

- Schneider, T., Teixeira, J., Bretherton, C. et al. Climate goals and computing the future of clouds. Nature Climate Change 7, 3–5 (2017).

You might also like

Statistics

Checking your assumptions

Statistics

Internet searches offer early warnings of disease outbreaks

Statistics

Joining the dots for better health surveillance

Statistics

Easing the generation and storage of climate data

Statistics

A high-resolution boost for global climate modeling

Applied Mathematics and Computational Sciences

Finer forecasting to improve public health planning

Bioengineering

Shuffling the deck for privacy

Bioengineering