
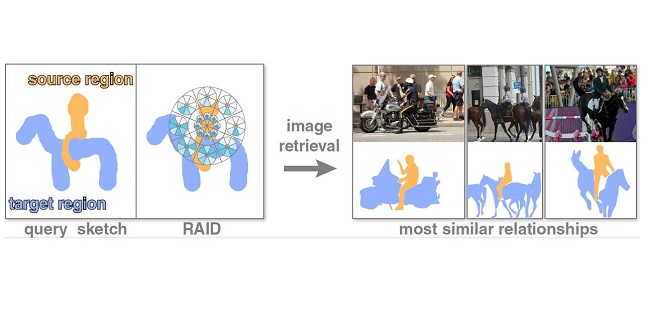

Technology search for relationships

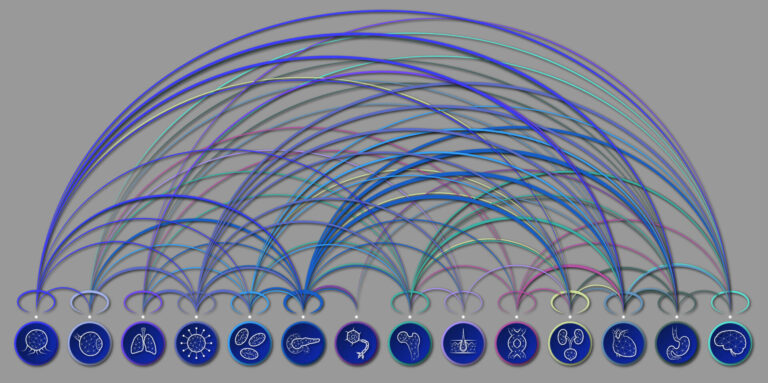

A sketch-based query for searching for relationships among objects in images could enhance the power and utility of image search tools.

The relation-augmented image descriptor (RAID) allows image databases to be searched based on the relationship between objects in a sketch or written query.

Reproduced with permission from ref. 1 © 2016 Association for Computing Machinery

Searching for specific images may become easier thanks to a new tool that generates image queries based on a sketch or description of objects in spatial relationships¹. The tool, proposed by researchers from KAUST and University College London, makes it easier to search the world’s ever-expanding image databases using a wider and more powerful range of queries.

The enormous collections of photographs and pictures now available in online databases represent a remarkable resource for research and the creative arts. However rich these databases might be, they are only as useful as a user’s ability to search them effectively with a query.

“When searching for images in a database like Flickr, the images need to include a short but informative description,” explained Peter Wonka, the KAUST researcher who led the study. “The description needs to be short to allow the search algorithm to match against millions of possibilities, but also needs to be informative because the correct images need to be found based solely on this description.”

Wonka and his colleagues Paul Guerrero and Niloy Mitra from University College London wanted to add something more powerful to the currently limited repertoire of image search tools without adding extra metadata to existing images.

“Instead of describing just the individual objects occurring in an image, we wanted to describe the relationships between objects—such as ‘riding,’ ‘carrying,’ ‘holding’ or ‘standing on’—in a way that can be computed and searched for efficiently,” noted Wonka.

The team came up with a query tool they call a relation-augmented image descriptor (RAID) that takes either a written description or sketch of objects in a specific spatial relationship and searches for matches in the image database based on relatively simple geometric processing.

“RAID allows us to search using a sentence such as ‘person standing on snowboard’ or to use a simple sketch of the desired composition of objects or an example image with the desired object composition,” said Wonka. “Our scheme uses a novel description based on the spatial distribution of simple relationships — like ‘above’ or ‘left of’ — over the entire object, which allows us to successfully discriminate between different complex relationships.”
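The quote above describes the core idea: characterize a pair of objects by how simple directional relations such as "above" or "left of" are distributed across the whole object. The toy Python sketch below illustrates only that idea and is not the RAID descriptor defined in the paper. It assumes objects are given as lists of pixel coordinates, uses four 90-degree direction bins, averages them over a 2×2 grid laid on the first object's bounding box, and ranks database entries by L1 distance. The function names (`relation_descriptor`, `rank`, `box`) and all of these parameter choices are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical direction bins: where a point of object B lies relative to a point of A.
# Image coordinates are assumed, so y grows downward ("down" means larger y).
DIRECTIONS = ("right", "left", "up", "down")


def direction(p: Point, q: Point) -> int:
    """Return the index in DIRECTIONS of the 90-degree sector containing q as seen from p."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    if abs(dx) >= abs(dy):
        return 0 if dx > 0 else 1
    return 3 if dy > 0 else 2


def relation_descriptor(a: Sequence[Point], b: Sequence[Point], grid: int = 2) -> List[float]:
    """Toy relation descriptor for the ordered pair (A, B); not RAID's actual definition.

    For every point of A, compute the fraction of B's points in each direction,
    then average those fractions inside each cell of a grid x grid partition of
    A's bounding box, so the result records how the simple relations vary across A.
    """
    xs = [p[0] for p in a]
    ys = [p[1] for p in a]
    x0, y0 = min(xs), min(ys)
    w = max(max(xs) - x0, 1e-9)  # avoid division by zero for one-pixel-wide objects
    h = max(max(ys) - y0, 1e-9)
    sums = [[0.0] * len(DIRECTIONS) for _ in range(grid * grid)]
    counts = [0] * (grid * grid)
    for p in a:
        hist = [0] * len(DIRECTIONS)
        for q in b:
            if q != p:  # coincident points have no direction
                hist[direction(p, q)] += 1
        n = sum(hist)
        if n == 0:
            continue
        # Grid cell of A's bounding box that contains p.
        cx = min(int((p[0] - x0) / w * grid), grid - 1)
        cy = min(int((p[1] - y0) / h * grid), grid - 1)
        cell = cy * grid + cx
        for k in range(len(DIRECTIONS)):
            sums[cell][k] += hist[k] / n
        counts[cell] += 1
    descriptor: List[float] = []
    for cell in range(grid * grid):
        c = counts[cell]
        descriptor.extend(s / c if c else 0.0 for s in sums[cell])
    return descriptor


def rank(query: List[float], database: Dict[str, List[float]]) -> List[str]:
    """Order database entries by L1 distance to the query descriptor (closest first)."""
    return sorted(database, key=lambda name: sum(abs(x - y) for x, y in zip(query, database[name])))


def box(x0: int, y0: int, x1: int, y1: int) -> List[Point]:
    """Hypothetical helper: all integer pixel coordinates of a filled rectangle."""
    return [(x, y) for x in range(x0, x1 + 1) for y in range(y0, y1 + 1)]


if __name__ == "__main__":
    # A "sketch": a person drawn directly above a snowboard.
    query = relation_descriptor(box(0, 0, 2, 3), box(0, 10, 2, 10))
    database = {
        "person next to snowboard": relation_descriptor(box(0, 0, 2, 6), box(20, 0, 20, 6)),
        "person standing on snowboard": relation_descriptor(box(5, 5, 8, 9), box(4, 20, 9, 20)),
    }
    print(rank(query, database))
    # prints ['person standing on snowboard', 'person next to snowboard']
```

In this toy setup the "standing on" entry matches the sketch exactly, because every point of the person sees the board below it, while in the "next to" entry the board lies to the right. Keeping the relations per grid cell, rather than one histogram for the whole object, preserves some information about where on the object each relation holds, which is the kind of detail the quote suggests helps discriminate between complex relationships.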

RAID provides a new way to describe images and has potential applications in computer graphics, computer vision and automated object classification. The team is currently working on a three-dimensional version of the descriptor that could help with computer interpretation of entire scenes.

References

1. Guerrero, P., Mitra, N. J. & Wonka, P. RAID: a relation-augmented image descriptor. ACM Transactions on Graphics 35, 1-12 (2016).
