Statistics

Going to extremes to predict natural disasters

A systematic approach to selecting and configuring statistical models improves predictions of extreme events.

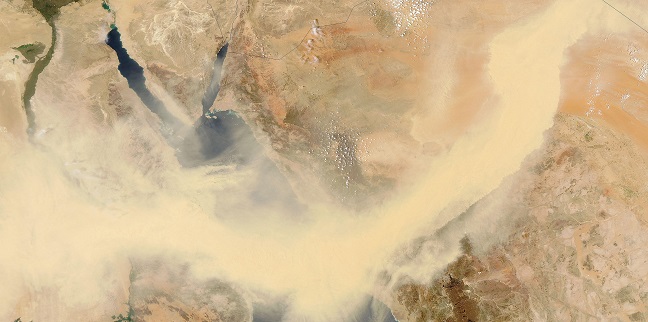

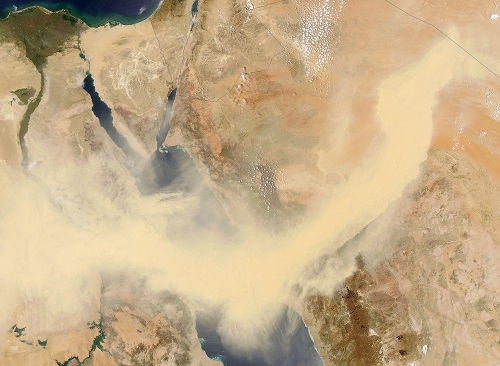

A Red Sea sandstorm in 2005. Better prediction of extreme events, like natural disasters, such as sandstorms, could help mitigate and plan for their impact.

© Science History Images / Alamy Stock Photo

Predicting natural disasters remains one of the most challenging problems in simulation science because not only are they rare but also because only few of the millions of entries in datasets relate to extreme events. A systematic method for comparing the accuracy of different types of simulation models for such prediction problems has recently been developed by a working group at KAUST.

“Extreme events like dust storms, droughts and floods can affect communities and damage infrastructure,” says Sabrina Vettori, a doctoral student cosupervised by Marc Genton and Raphaël Huser. “Modeling and forecasting extremes is very challenging and requires flexible, yet interpretable, models with sound theoretical underpinning—criteria that are exponentially more difficult to meet as the data dimensionality increases,” she explains.

Increasing the dimensionality or number of observation variables (like temperature and wind speed) dramatically increases the predictive power of a simulation model, but the statistical dexterity needed to correctly pick out and predict the combination of conditions leading to extreme events is immense.

“We are exploring the boundaries of extreme value theory,” says Genton. “The aim of our work is to provide a greater understanding of the performance of existing estimators for modeling extreme events over multiple variables and to develop a new statistical method for nonparametric estimation in higher dimensions.”

Multivariate simulations generally follow one of two approaches. The first are parametric approaches that configure the model by using a set of variables to best approximate the behavior described by the data. The second are nonparametric approaches, which are statistical methods that fit a function to data but use no underlying assumptions or constraints.

Both approaches have pros and cons, and the best method depends on the application,” says Huser.

“Nonparametric methods are typically more flexible than parametric methods, making them less prone to bias, but they are usually limited to small dimensions,” explains Huser. “Parametric methods can be applied to much higher-dimensional problems, such as spatial applications with data recorded at a large number of monitoring sites, but are sensitive to errors in the underlying parameters and assumptions.

In their research, the team developed a computational tool to implement nonparametric methods and conducted a vast and systematic simulation to compare nonparametric and parametric estimator performance in up to five dimensions under various scenarios. These methods provided significant insight into higher dimensional settings.

“These estimators can be used to better model the location and magnitude of extreme events and to assist in risk assessment and the identification of trends and variability estimates,” says Vettori.

References

- Vettori, S., Huser, R. & Genton, M. G. A comparison of dependence function estimators in multivariate extremes. Statistics and Computing, advance online publication, 11 May 2017.| article

You might also like

Statistics

Checking your assumptions

Statistics

Internet searches offer early warnings of disease outbreaks

Statistics

Joining the dots for better health surveillance

Statistics

Easing the generation and storage of climate data

Statistics

A high-resolution boost for global climate modeling

Applied Mathematics and Computational Sciences

Finer forecasting to improve public health planning

Bioengineering

Shuffling the deck for privacy

Bioengineering