Computer Science

A blindfold approach improves machine learning privacy

A query-based method for extracting knowledge from sensitive datasets without exposing any of the underlying private data could resolve long-standing privacy concerns in machine learning.

A key challenge lies in balancing patient privacy with the opportunity to improve future outcomes when training artificial intelligence (AI) models for applications such as medical diagnosis and treatment. A KAUST-led research team has now developed a machine-learning approach that allows relevant knowledge about a patient’s unique genetic, disease and treatment profile to be passed between AI models without transferring any original data[1].

“When it comes to machine learning, more data generally improves model quality,” says Norah Alballa, a computer scientist from KAUST. “However, much data is private and hard to share due to legal and privacy concerns. Collaborative learning is an approach that aims to train models without sharing private training data for enhanced privacy and scalability. Still, existing methods often fail in heterogeneous data environments when local data representation is insufficient.”

Learning from sensitive data while preserving privacy is a long-standing problem in AI, because privacy constraints restrict access to large datasets, such as clinical records, that could greatly accelerate research and improve the effectiveness of personalized medicine.

One way to maintain privacy in machine learning is to break up the dataset and train AI models on the individual subsets. The trained models can then share just the learnings from the underlying data without breaching privacy.

This approach, known as federated learning, can work well when the datasets are largely similar. When distinctly different datasets form part of the training library, however, the machine-learning process can break down.
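To make the idea of sharing learnings rather than data concrete, here is a minimal sketch of weighted parameter averaging, a common aggregation step in federated learning. The article does not say which aggregation rule the compared methods use, so the function name `federated_average`, the two-hospital example and all numbers are illustrative assumptions.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client parameter vectors, weighted by local dataset size.

    Only model parameters are passed in; the raw training records
    never leave the clients. (Hypothetical helper, for illustration.)
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client model")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical hospitals with 1 and 3 local records respectively.
global_weights = federated_average([[0.0, 2.0], [4.0, 6.0]], [1, 3])
print(global_weights)  # [3.0, 5.0]
```

In practice this averaging is repeated over many communication rounds, and when the clients' data differ sharply, the averaged model can drift away from what any single client learned.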

“These approaches can fail because, in a heterogeneous data environment, a local client can ‘forget’ existing knowledge when new updates interfere with previously learned information,” says Alballa. “In some cases, introducing new tasks or classes from other datasets can lead to catastrophic forgetting, causing old knowledge to be overwritten or diluted.”

Alballa, working with principal investigator Marco Canini in the SANDS computing lab, addressed this problem by extending an existing approach called knowledge distillation (KD). The resulting method, query-based knowledge transfer (QKT), combines KD with three components: data-free relevance estimation, designed to improve the relevance of retrieved learnings; a masking process that uses synthetic data to filter out irrelevant knowledge; and a two-phase training strategy that integrates new knowledge without disrupting existing knowledge, avoiding the risk of catastrophic forgetting.
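The combination of distillation and masking can be illustrated with a small sketch. This is not the authors' implementation: it shows a generic distillation loss, the Kullback-Leibler divergence between a teacher's and a student's softened predictions, restricted to a set of relevant classes. The function names, the temperature value and the hand-written mask are assumptions; in the method described above, relevance is estimated without access to the original data.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with a temperature parameter."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def masked_distillation_loss(student_logits: Sequence[float],
                             teacher_logits: Sequence[float],
                             relevant: Sequence[bool],
                             temperature: float = 2.0) -> float:
    """KL(teacher || student) computed over the relevant classes only.

    Classes where `relevant` is False are dropped before the softmax,
    so teacher outputs for them cannot influence the student.
    (Hypothetical helper; the mask is supplied by hand here.)
    """
    keep = [i for i, flag in enumerate(relevant) if flag]
    if not keep:
        raise ValueError("at least one class must be marked relevant")
    p_teacher = softmax([teacher_logits[i] for i in keep], temperature)
    p_student = softmax([student_logits[i] for i in keep], temperature)
    return sum(p * math.log(p / q)
               for p, q in zip(p_teacher, p_student) if p > 0)


teacher = [2.0, 0.5, -1.0]
mask = [True, True, False]            # the third class is treated as irrelevant
agreeing_student = [2.0, 0.5, 5.0]    # differs only on the masked class
disagreeing_student = [0.0, 3.0, -1.0]

loss_agree = masked_distillation_loss(agreeing_student, teacher, mask)
loss_disagree = masked_distillation_loss(disagreeing_student, teacher, mask)
```

Here `loss_agree` is zero because the student matches the teacher on every relevant class, while `loss_disagree` is positive. A two-phase schedule of the kind described would then decide which parts of the student model are updated with this loss and when, a step this sketch leaves out.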

“Mitigating knowledge interference and catastrophic forgetting was our main challenge in developing our query-based knowledge transfer approach,” says Alballa. “QKT outperforms methods like naive KD, federated learning, and ensemble approaches by a significant margin – more than 20 percentage points in single-class queries. It also eliminates communication overhead by operating in a single round, unlike federated learning, which requires multiple communication steps.”

QKT has broad applications in medical diagnosis. It could enable, for example, one hospital’s AI model to learn to detect a rare disease from another hospital’s model without sharing sensitive patient data.

It also has applications in other systems where models must adapt to new knowledge while preserving privacy, such as fraud detection and intelligent Internet-of-Things systems.

“By balancing learning efficiency, knowledge customization, and privacy preservation, QKT represents a step forward in decentralized and collaborative machine learning,” Alballa says.

Reference

- Alballa, N., Zhang, W., Liu, Z., Abdelmoniem, A.M., Elhoseiny, M., & Canini, M. Query-based knowledge transfer for heterogeneous learning environments. Thirteenth International Conference on Learning Representations (2025).

You might also like

Computer Science

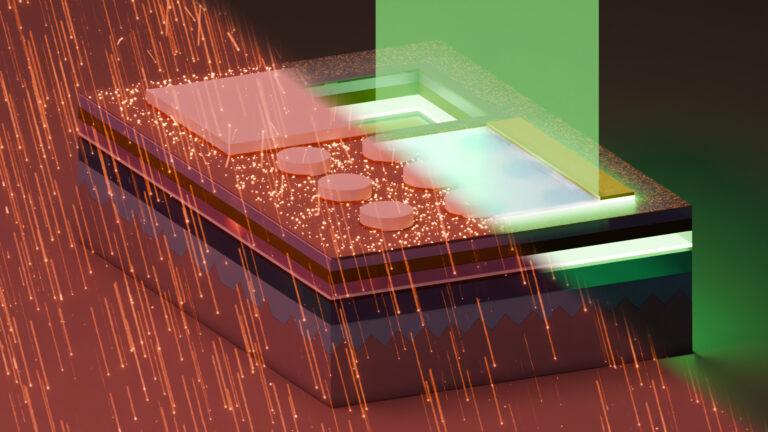

Green quantum computing takes to the skies

Computer Science

Probing the internet’s hidden middleboxes

Bioscience

AI speeds up human embryo model research

Computer Science

Improving chip design on every level

Computer Science

Sweat-sniffing sensor could make workouts smarter

Computer Science

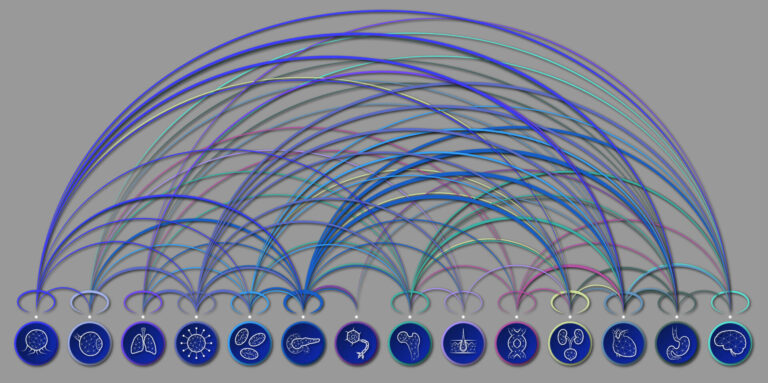

AI tool maps hidden links between diseases

Bioscience

The theory of everything that wasn’t

Computer Science