The theory of everything that wasn’t

A much-hyped new theory that purports to provide a universal explanation of natural phenomena merely repackages existing theoretical tools.

A peer-reviewed mathematical proof published by a KAUST co-led international research team in PLOS Complex Systems[1] has shown that the recently published Assembly Theory (AT) approximates existing theories of algorithmic complexity and information compression. The findings cast doubt on some of the new theory’s claims to explain a range of natural phenomena from biology and evolution to physics, the Universe, and even the search for extraterrestrial life.

“We were motivated to study AT because of its broad claims and widespread misconceptions regarding information compression, computability and algorithmic complexity, which have been the focus of our group’s research for over a decade,” says Jesper Tegner from KAUST’s Living Systems Lab, who, along with Hector Zenil of King’s College London, led the research team. “AT’s attraction lies in its interdisciplinary appeal, offering the idea of a unified framework that could potentially quantify complexity across various domains, in particular selection and evolution, and solve longstanding problems. So, we set out to rigorously scrutinize AT’s claims, particularly regarding its novelty.”

The idea behind AT is to measure the complexity of molecular structures based on the number of steps required to assemble them from basic building blocks, calculated through an “assembly index.” The researchers observed that this is very similar to Lempel-Ziv (LZ) compression, a popular statistical compression method of the kind used for ZIP and PNG files, which identifies patterns in data and reduces file size by replacing repetitive elements with shorter representations.

“This applies not just to digital data; it is effectively the same as counting how many unique blocks are needed to recreate any original object, as our own measures have always done,” says Tegner. “Our collaboration, which includes experts in cell and molecular biology, complexity science and information theory, had previously shown the strong connections between compression and selection and evolution. What the assembly index does is in fact already done better by an index introduced by our collaboration over a decade ago called the Block Decomposition Method, so it is important for us to address these misunderstandings for the scientific community.”
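The block-counting idea can be made concrete with a small sketch. The Python below is an illustration only: it implements the classic LZ78 parsing, which splits a sequence into the shortest blocks not seen before and counts them. It is not the assembly index, not the Block Decomposition Method, and not the exact scheme analyzed in the paper; ZIP and PNG use DEFLATE, a related LZ77-based method. The function name `lz78_phrases` is hypothetical.

```python
def lz78_phrases(sequence):
    """Split a string into LZ78 phrases.

    Each phrase is the shortest prefix of the remaining input that has
    not appeared as a phrase before. Fewer phrases means more repetition
    was found, so the sequence is more compressible.
    """
    seen = set()
    phrases = []
    current = ""
    for symbol in sequence:
        current += symbol
        if current not in seen:
            # First time this block appears: record it and start a new one.
            seen.add(current)
            phrases.append(current)
            current = ""
    if current:
        # Leftover input that only repeats an earlier phrase.
        phrases.append(current)
    return phrases


repetitive = "abababab"
varied = "abcdefgh"

print(lz78_phrases(repetitive))  # ['a', 'b', 'ab', 'aba', 'b']
print(lz78_phrases(varied))      # ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h']
```

The two inputs have the same length, yet the repetitive one is covered by five blocks and the varied one needs eight. On longer inputs the gap widens, which is the sense in which counting reusable blocks acts as a compression-based measure of complexity.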

Through their mathematical proof, the research team demonstrated the direct relationship between AT’s assembly index and LZ compression, as well as Shannon entropy, a measure of the uncertainty in a system.

“A well-shuffled deck of cards for example would have a high Shannon entropy, while a perfectly ordered deck would have a low entropy,” says Zenil. “Assembly theory uses the same principle, but does so with many extra steps.”
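The card analogy can be worked through numerically. The sketch below rests on two assumptions that are not spelled out in the quote: a "well-shuffled" deck is modeled as every ordering being equally likely, and a "perfectly ordered" deck as one known ordering having probability 1. The helper `shannon_entropy_bits` and the four-card toy deck are hypothetical names chosen for illustration.

```python
import math
from itertools import permutations


def shannon_entropy_bits(probabilities):
    """Shannon entropy, sum of p * log2(1/p), of a discrete distribution in bits."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


# Toy deck of 4 cards so that all 4! = 24 orderings can be enumerated.
toy_deck = ["A", "K", "Q", "J"]
orderings = list(permutations(toy_deck))

# Assumption: well shuffled = uniform distribution over all orderings.
shuffled = [1 / len(orderings)] * len(orderings)
# Assumption: perfectly ordered = a single ordering is certain.
ordered = [1.0] + [0.0] * (len(orderings) - 1)

toy_shuffled_bits = shannon_entropy_bits(shuffled)  # about 4.58 bits
toy_ordered_bits = shannon_entropy_bits(ordered)    # 0 bits

# For a uniform distribution over n outcomes the entropy is log2(n),
# so a full 52-card deck can be handled without enumeration.
full_deck_bits = math.log2(math.factorial(52))      # roughly 225.6 bits

print(toy_shuffled_bits, toy_ordered_bits, full_deck_bits)
```

Under these assumptions the ordered deck carries no uncertainty at all, while naming the order of a shuffled 52-card deck takes roughly 226 bits.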

The mathematical proof showed the step-by-step equivalence between AT and existing methods based on information theory and algorithmic complexity, confirming that existing methods can achieve similar results without the need for AT’s additional conceptual layers.

“AT garnered attention in the popular media despite its unresolved theoretical challenges,” Tegner says. “This speaks to the power of framing and the appeal of interdisciplinary ideas, even if not fully substantiated. It is a cautionary tale, a reminder to maintain a critical perspective, particularly regarding claims of universal solutions to complex scientific problems.”

Reference

- Abrahão F.S., Hernández-Orozco S., Kiani N.A., Tegnér J. & Zenil H. Assembly Theory is an approximation to algorithmic complexity based on LZ compression that does not explain selection or evolution. PLOS Complex Systems 1, e0000014 (2024).

You might also like

Bioscience

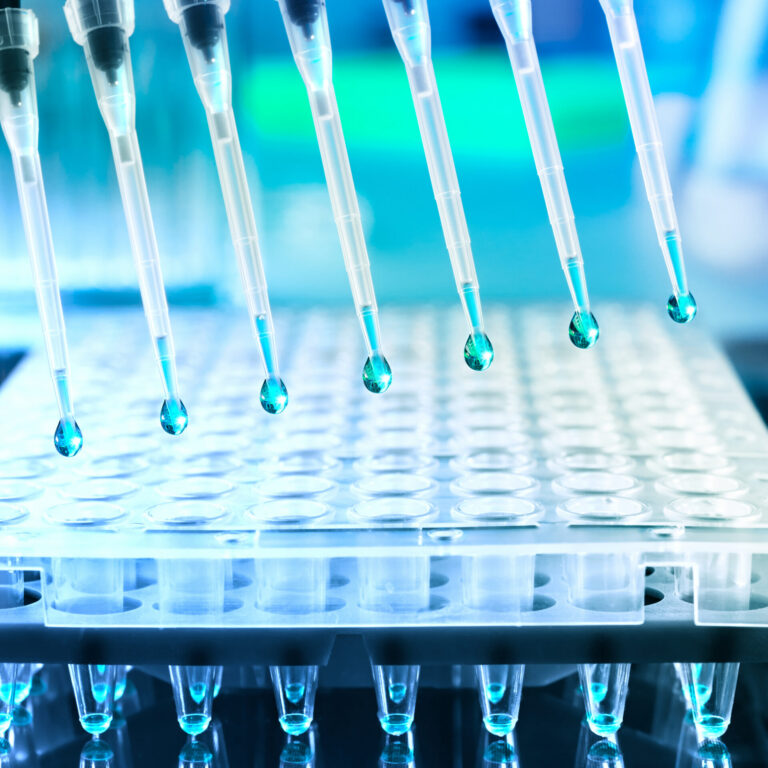

Robust workflow built for chemical genomic screening

Bioscience

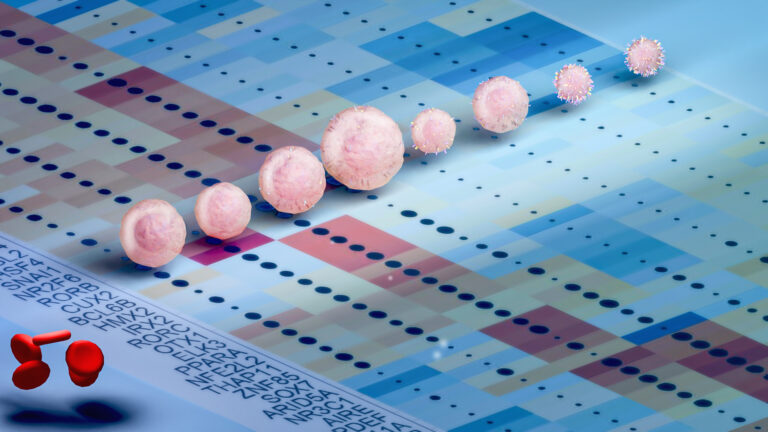

Cell atlas offers clues to how childhood leukemia takes hold

Bioscience

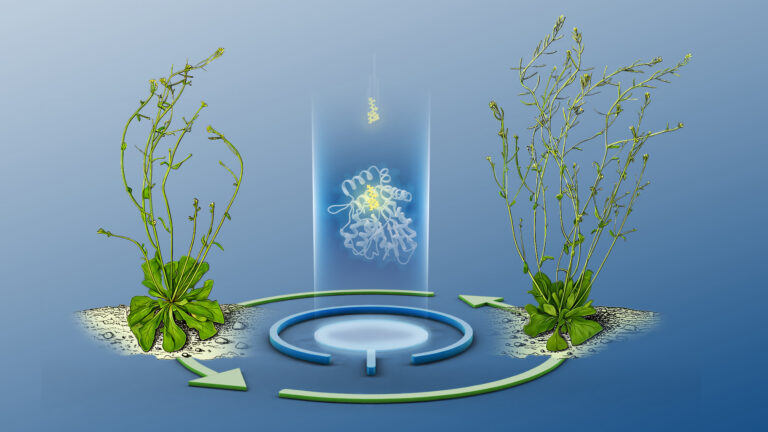

Hidden flexibility in plant communication revealed

Bioscience

Harnessing the unintended epigenetic side effects of genome editing

Computer Science

Green quantum computing takes to the skies

Computer Science

Probing the internet’s hidden middleboxes

Bioscience

Mica enables simpler, sharper, and deeper single-particle tracking

Bioengineering