
Giving voice to the voiceless through assistive technology

Artificial skin coupled with sensors and machine learning technology allows people with voice disorders to communicate using soundless mouth movements.

More than 18 million people in the United States alone have problems creating or forming speech sounds. To address such communication challenges, researchers at KAUST have developed an assistive magnetic skin system for speech reconstruction, known as AM2S-SR.[1]

“Most individuals with speech impairments rely on sign language, which very few people understand. Our technology enables a more natural way to communicate, using only their mouth movements, whenever they want and wherever they are,” says Montserrat Ramirez De Angel, a bioengineer working towards a Ph.D. under Khaled Salama’s supervision.

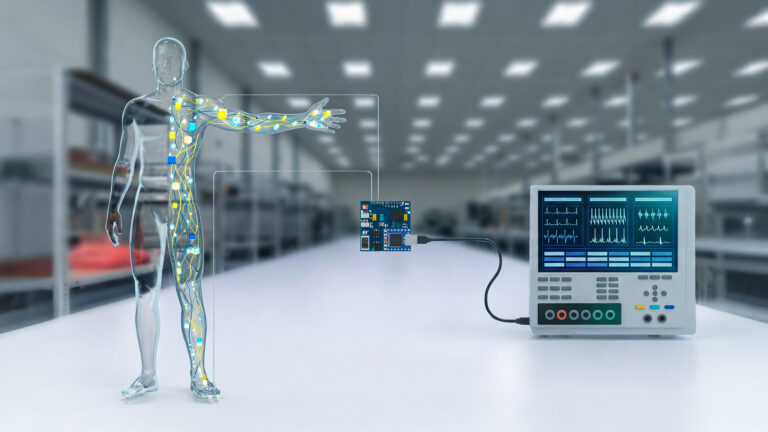

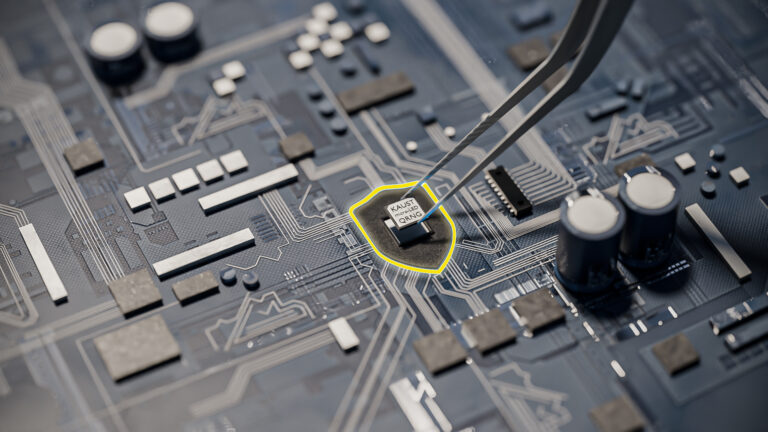

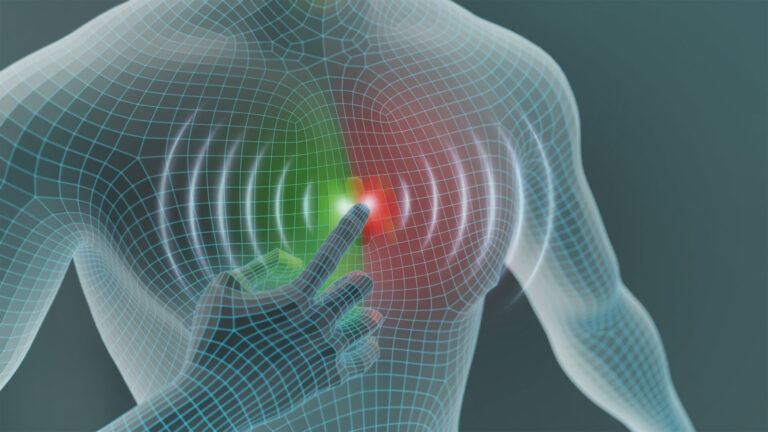

The system combines wearable magnetic skins and machine learning to track mouth movements for speech reconstruction. The first-of-its-kind artificial skin, developed by Abdullah Almansouri in Salama’s laboratory, is safe and comfortable to wear, can be made in any shape and size, and does not need a wired connection to other devices. It contains magnetized microparticles. When paired with magnetic sensors, the skin can be used to measure vibration, touch and movement.

Previous work has demonstrated how the magnetic skin can be used to track facial expressions in people with quadriplegia and help them control wheelchair movements. The skin can also track eye movements, which could be used to analyze sleep patterns or interact with machines without making contact.

“When we tested magnetic skin samples around the mouth, we realized it could accurately ‘read’ the movements involved in producing speech. We then embarked on this project to help people with voice disorders to communicate more easily,” Ramirez De Angel explains.

AM2S-SR has been designed to help people with voice disorders caused by physical or functional damage that affects the movement of air from the lungs to the vocal cords, throat, nose, mouth or lips.

The system uses two magnetic skin patches attached near the bottom lip. As the user mouths words, the artificial skin moves, changing the magnetic field around the mouth. Two magnetic sensors (magnetometers), one on each side of the face, detect these changes and stream the data to a head unit. There, a machine learning algorithm processes the data and predicts the intended letters and words. The predicted words are then sent to a display or speakers.
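The article does not say which machine learning algorithm AM2S-SR uses. The following is therefore only an illustrative sketch of the general sense, extract and classify idea. It assumes that each sensor delivers a short window of field-magnitude samples for every mouthed letter. The feature set, the nearest-centroid classifier, the `LETTER_PROFILES` amplitudes and the synthetic signals are all hypothetical stand-ins, not the published method or data.

```python
import math
import random
import statistics

# ASSUMPTION: one recording = a window of field-magnitude samples from each of
# the two sensors (left, right). This format is hypothetical.
Recording = tuple[list[float], list[float]]

# Invented per-letter signal amplitudes (left, right), for demonstration only.
LETTER_PROFILES = {"A": (1.0, 5.0), "B": (5.0, 1.0), "O": (5.0, 5.0)}


def features(recording: Recording) -> list[float]:
    """Summarize each sensor's change relative to its first (resting) sample."""
    feats: list[float] = []
    for signal in recording:
        delta = [x - signal[0] for x in signal]
        feats += [statistics.mean(delta), statistics.pstdev(delta), max(delta) - min(delta)]
    return feats


def train_centroids(samples: list[tuple[Recording, str]]) -> dict[str, list[float]]:
    """Nearest-centroid training: average the feature vectors of each label."""
    by_label: dict[str, list[list[float]]] = {}
    for recording, label in samples:
        by_label.setdefault(label, []).append(features(recording))
    return {label: [statistics.mean(col) for col in zip(*vecs)]
            for label, vecs in by_label.items()}


def predict(centroids: dict[str, list[float]], recording: Recording) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    f = features(recording)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


def synthetic_recording(left_amp: float, right_amp: float, rng: random.Random) -> Recording:
    """Fake data: one smooth bump per sensor with a letter-specific amplitude, plus noise."""
    n, base = 50, 40.0  # sample count and resting field level are arbitrary choices
    bump = [math.sin(math.pi * i / (n - 1)) for i in range(n)]
    left = [base + left_amp * b + rng.gauss(0, 0.1) for b in bump]
    right = [base + right_amp * b + rng.gauss(0, 0.1) for b in bump]
    return (left, right)


def demo(per_letter: int = 10) -> float:
    """Train on one synthetic set, test on another, and return the accuracy."""
    train_rng, test_rng = random.Random(0), random.Random(1)
    train = [(synthetic_recording(l, r, train_rng), letter)
             for letter, (l, r) in LETTER_PROFILES.items() for _ in range(per_letter)]
    centroids = train_centroids(train)
    correct = total = 0
    for letter, (l, r) in LETTER_PROFILES.items():
        for _ in range(per_letter):
            total += 1
            correct += predict(centroids, synthetic_recording(l, r, test_rng)) == letter
    return correct / total


if __name__ == "__main__":
    print(f"accuracy on synthetic data: {demo():.2%}")
```

A nearest-centroid classifier keeps the sketch short and dependency-free. Real mouth-movement signals vary far more between people, which is why, as the researchers note, a large training set is needed. A practical system would likely use richer features and a more capable model.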

“Because mouth movements are so complex and differ between individuals, we had to collect a lot of data to train the system, but as a result, it is very robust,” she says. In tests, AM2S-SR identified the letters of the English alphabet with 94.96% accuracy.

Ramirez De Angel received an award for her live demonstration of AM2S-SR at the IEEE Biomedical Circuits and Systems Conference 2023 and will soon begin testing the system with patients. “I will begin a collaboration with a rehabilitation center in Italy, where we will train the system to accommodate specific speech-sound diseases, such as apraxia or asymmetric mouth movement coordination.”

Reference

- Ramirez-De Angel, M., Almansouri, A. S. & Salama, K. N. Assistive magnetic skin system for speech reconstruction: an empowering technology for aphonic individuals. Advanced Intelligent Systems 6, 2300452 (2023).

You might also like

Bioengineering

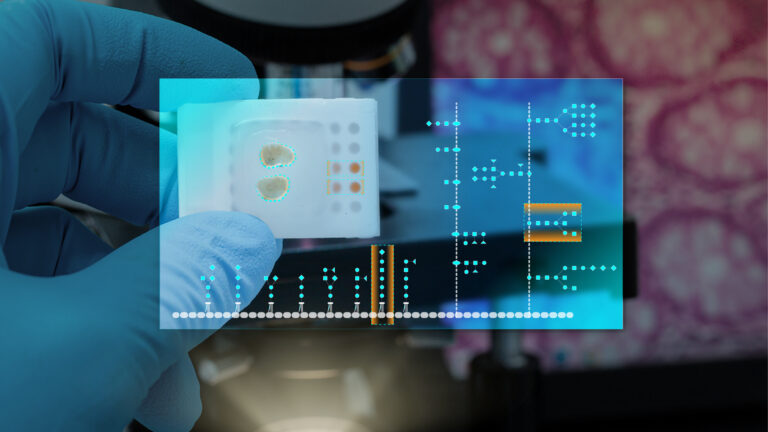

Self-aware biosensors boost digital health monitoring

Bioengineering

Algae and the chocolate factory

Bioengineering

Smart patch detects allergies before symptoms strike

Computer Science

Green quantum computing takes to the skies

Bioengineering

Cancer’s hidden sugar code opens diagnostic opportunities

Electrical Engineering

Micro-LEDs boost random number generation

Bioengineering

Promising patch for blood pressure monitoring

Bioengineering