Computer Science

A machine that learns to learn

A neural network that can learn its own learning algorithm opens the door to self-improving artificial intelligence.

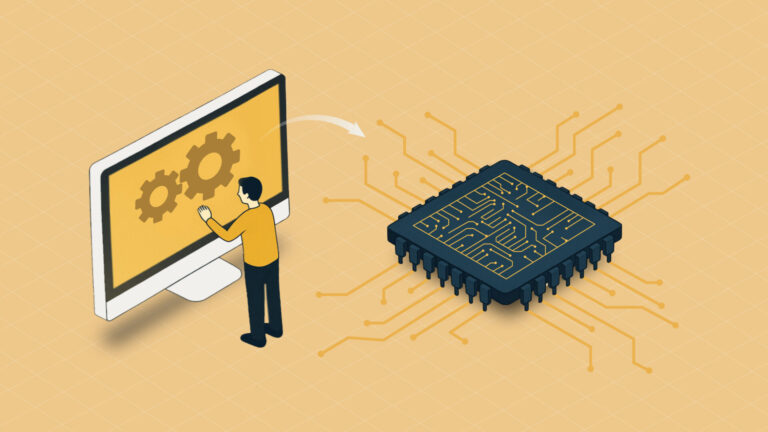

By replacing parts of the conventional nodes in a neural network (NN) with tiny NNs, a research team from KAUST and the Swiss Artificial Intelligence Lab IDSIA has devised a general self-improving artificial intelligence (AI) model[1]. The proof-of-concept study could enable a new generation of AI that can “learn to learn” without human programming.

Today’s most popular AI models are artificial neural networks (NNs): interconnected networks of programmable nodes whose weighted connections are incrementally adjusted in response to labelled training data. The program that changes the weights is called a learning algorithm (LA), and it is preconfigured by the human developer.

The most popular LA is backpropagation, in which the NN “learns” by adjusting its weights until it gives the correct answers for the training inputs. However, current LAs such as backpropagation are limited to what humans can invent and may be suboptimal.
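To make the idea of a human-written learning algorithm concrete, here is a minimal sketch of gradient-descent training for a single sigmoid node, the one-node special case of backpropagation. The task (the logical OR function), the function name `train_neuron`, the learning rate and the number of epochs are all illustrative assumptions and do not come from the study.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, lr=0.5, epochs=2000):
    """Train one sigmoid node with a fixed, human-written update rule."""
    w = [0.0, 0.0]  # connection weights
    b = 0.0         # bias
    for _ in range(epochs):
        for x, target in data:
            y = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Gradient of the squared error 0.5 * (y - target)**2
            # with respect to the node's pre-activation.
            delta = (y - target) * y * (1.0 - y)
            # The hardwired learning algorithm: step each weight downhill.
            w[0] -= lr * delta * x[0]
            w[1] -= lr * delta * x[1]
            b -= lr * delta
    return w, b


# Labelled training data for logical OR (illustrative choice of task).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w, b = train_neuron(OR_DATA)
predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in OR_DATA]
print(predictions)
```

Every line of the update rule here was chosen by a person; the network only supplies the numbers that the rule adjusts.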

“Since the 1970s, my main goal has been to build a self-improving AI that learns to become much smarter than myself,” says Juergen Schmidhuber, who has been referred to as the father of modern AI and is the director of KAUST’s Artificial Intelligence Initiative. “In this work, we created a method that ‘meta learns’ general purpose LAs that start to rival the old human-designed backpropagation LA.”

Ph.D. student Louis Kirsch and Schmidhuber replaced the weights of the nodes with small NNs tasked with discovering good weight change algorithms on their own — an elegantly simple modification with huge implications.

“In our proposed method, called Variable Shared Meta Learning or VSML, the NN weights are not directly updated by a human-invented LA to improve classification; instead, the NN ‘teaches itself’ to get better. It no longer uses backpropagation, but instead discovers novel ways of learning that differ from what humans have invented,” says Schmidhuber.
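The structural idea can be sketched roughly as follows. This is not the authors' implementation: it assumes, purely for illustration, that each connection holds a small state vector in place of a single weight, and that one tiny recurrent network with parameters shared by all connections updates that state from the forward input and a feedback signal. The names `STATE_SIZE`, `make_shared_params`, `connection_step` and `layer_step` are hypothetical, and the shared parameters are random here rather than meta-learned.

```python
import math
import random

STATE_SIZE = 2  # hypothetical size of the state held by each connection


def make_shared_params(rng):
    """One small parameter set reused by every connection (assumption)."""
    n_in = STATE_SIZE + 2  # own state + forward input + feedback signal
    return {
        "update": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(STATE_SIZE)],
        "readout": [rng.uniform(-0.5, 0.5) for _ in range(STATE_SIZE + 1)],
    }


def connection_step(params, state, x, feedback):
    """Tiny recurrent net for one connection: returns (new_state, message)."""
    inputs = state + [x, feedback]
    new_state = [math.tanh(sum(p * v for p, v in zip(row, inputs)))
                 for row in params["update"]]
    message = sum(p * v for p, v in zip(params["readout"], new_state + [x]))
    return new_state, message


def layer_step(params, states, xs, feedbacks):
    """Run every connection of a layer and sum the messages per output node."""
    outputs = []
    for j, fb in enumerate(feedbacks):
        total = 0.0
        for i, x in enumerate(xs):
            states[i][j], msg = connection_step(params, states[i][j], x, fb)
            total += msg
        outputs.append(math.tanh(total))
    return outputs


rng = random.Random(0)
params = make_shared_params(rng)
# A layer with 4 inputs and 3 outputs: one state vector per connection.
states = [[[0.0] * STATE_SIZE for _ in range(3)] for _ in range(4)]
xs = [1.0, 0.5, -0.5, 0.0]
out_first = layer_step(params, states, xs, [0.0, 0.0, 0.0])
out_second = layer_step(params, states, xs, [1.0, -1.0, 0.0])
n_shared = sum(len(row) for row in params["update"]) + len(params["readout"])
print(out_first, out_second, n_shared)
```

In this sketch no external rule ever rewrites a weight. Whatever the layer "learns" lives in the per-connection states, and how those states change is determined by the small shared parameter set, whose size does not depend on the size of the layer. In a meta-learning setup, that shared set is what would be optimized.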

Previous meta-learning methods were often limited to narrow domains of similar problems. Importantly, VSML allows for the discovery of novel general-purpose learning algorithms that can solve problems from classes the model has not encountered before.

Kirsch and Schmidhuber reported a range of experiments using VSML, demonstrating its learning speed, its adaptability and its discovery of a problem-specific improvement on backpropagation.

“The most widely used machine-learning algorithms were invented and hardwired by humans,” says Schmidhuber. “Can we also construct meta-learning algorithms that learn better LAs in order to build truly self-improving AI without any limits other than the limits of computability and physics?”

Schmidhuber’s work is a step in this direction.

Reference

- Kirsch, L. & Schmidhuber, J. Meta learning backpropagation and improving it. Advances in Neural Information Processing Systems 34, 14122-14134 (2021).

You might also like

Computer Science

Green quantum computing takes to the skies

Computer Science

Probing the internet’s hidden middleboxes

Bioscience

AI speeds up human embryo model research

Computer Science

Improving chip design on every level

Computer Science

Sweat-sniffing sensor could make workouts smarter

Computer Science

A blindfold approach improves machine learning privacy

Computer Science

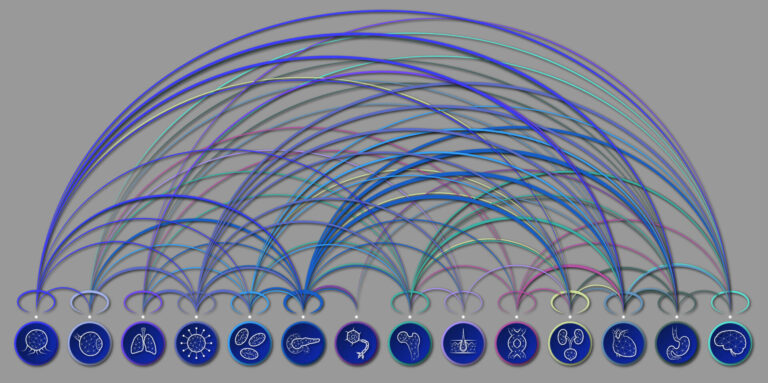

AI tool maps hidden links between diseases

Bioscience