Applied Mathematics and Computational Sciences | Statistics

Neural networks give deeper insights

A “deep,” many-layered neural network does the heavy lifting in making accurate predictions from large, complex environmental datasets.

A statistical approach that harnesses the learning capability of neural networks can efficiently make predictions from even the largest and most complex spatiotemporal datasets, KAUST researchers have shown.[1] The method, called space-time DeepKriging, opens the way to faster and more reliable prediction of environmental processes.

“The collection of time-series data from a large number of spatial locations is now common in many research fields,” says Pratik Nag from the research team. “However, conventional statistical analyses of such spatiotemporal data generally rely on traditional Gaussian process models and they assume that the relationships in the data are stationary over time, which is often not the case.”

Traditional statistical analyses of spatiotemporal data require assumptions about the underlying relationships in the data to be made up front. Heavy computations are then needed to fit the model to the data using a likelihood-based approach. This generally requires assuming that the data follow a “Gaussian,” or bell-curve, distribution and that every point is related to every other point in a smooth and predictable way. For large, complex datasets with unknown spatiotemporal relationships, however, this can lead to poor predictions.
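To make that computational cost concrete, here is a minimal Python sketch (our illustration, not the authors' code) of the exact Gaussian log-likelihood for n observations under a stationary exponential covariance. The covariance function, its parameter values and the choice to treat time as a third coordinate are all assumptions made for the example. Building the n × n covariance matrix takes O(n²) memory, and its Cholesky factorization takes O(n³) time, which is the heavy computation that becomes prohibitive for large spatiotemporal datasets.

```python
import numpy as np


def exponential_cov(coords, sigma2=1.0, length=0.3):
    """Stationary exponential covariance sigma2 * exp(-distance / length) for all pairs of rows."""
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return sigma2 * np.exp(-dist / length)


def gaussian_loglik(y, coords, sigma2=1.0, length=0.3, nugget=1e-6):
    """Exact log-likelihood of zero-mean Gaussian data y observed at coords.

    Cost: the n x n covariance needs O(n^2) memory; the Cholesky factorization needs O(n^3) time.
    """
    n = y.shape[0]
    # Small nugget on the diagonal for numerical stability (an assumption of this sketch).
    cov = exponential_cov(coords, sigma2, length) + nugget * np.eye(n)
    chol = np.linalg.cholesky(cov)        # cov = chol @ chol.T, the O(n^3) step
    z = np.linalg.solve(chol, y)          # z = chol^{-1} y, so z @ z = y^T cov^{-1} y
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (z @ z + logdet + n * np.log(2.0 * np.pi))


# Illustrative use: 500 random (x, y, t) points rescaled to [0, 1], with time as a third coordinate.
rng = np.random.default_rng(0)
coords = rng.uniform(size=(500, 3))
y = rng.normal(size=500)
print(gaussian_loglik(y, coords))
```

Because this covariance depends only on the distance between points, the model is also stationary, the assumption Nag describes as often unrealistic. Doubling n multiplies the factorization cost by roughly eight.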

“We developed a flexible approach that is suitable for non-Gaussian and nonstationary behaviors by using a deep neural network structure,” Nag explains.

Nag, in collaboration with KAUST’s Ying Sun and Brian Reich from North Carolina State University, instead embedded highly flexible models within a many-layered deep neural network (DNN). This allowed the DNN to “learn” the intrinsic and intricate relationships in the data without any heavy number crunching.

“Our approach operates without relying on likelihood estimation, which eliminates the need for intensive matrix calculations and significantly reduces the computational burden,” says Nag.

To develop their method, the research team needed to bridge deep learning algorithms and statistical models. This required mathematically transforming the spatiotemporal prediction and forecasting problems into regression problems that a DNN can solve.
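A common way to recast spatiotemporal prediction as regression (assumed here; the article does not spell out the construction) is to describe each location and time by a vector of basis-function values and then learn a function from that vector to the observation. The sketch below illustrates the idea under stated assumptions: Gaussian radial basis functions on regular grids at two resolutions (the basis used in space-time DeepKriging may differ), a synthetic data field, and a closed-form ridge regression standing in for the DNN so that the example runs with NumPy alone. All function names and settings are hypothetical.

```python
import numpy as np


def rbf_features(coords, n_per_dim, bandwidth):
    """Gaussian radial-basis features of (x, y, t) points in [0, 1]^3.

    Centers sit on a regular n_per_dim^3 grid; feature k is exp(-||p - c_k||^2 / (2 * bandwidth^2)).
    """
    g = np.linspace(0.0, 1.0, n_per_dim)
    centers = np.array(np.meshgrid(g, g, g, indexing="ij")).reshape(3, -1).T
    sq_dist = ((coords[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * bandwidth ** 2))


def embed(coords, resolutions=((3, 0.35), (6, 0.15))):
    """Raw coordinates plus RBF features at a coarse and a fine resolution (hypothetical settings)."""
    return np.hstack([coords] + [rbf_features(coords, k, bw) for k, bw in resolutions])


def fit_ridge(X, y, lam=1e-3):
    """Closed-form ridge regression with an intercept column; a stand-in for the DNN."""
    X1 = np.hstack([np.ones((X.shape[0], 1)), X])
    return np.linalg.solve(X1.T @ X1 + lam * np.eye(X1.shape[1]), X1.T @ y)


def predict(w, X):
    """Apply the fitted coefficients (intercept first) to a feature matrix."""
    return np.hstack([np.ones((X.shape[0], 1)), X]) @ w


def true_field(p):
    """Synthetic space-time signal used only for this illustration."""
    return np.sin(2 * np.pi * p[:, 0]) * np.cos(2 * np.pi * p[:, 1]) + p[:, 2]


rng = np.random.default_rng(0)
train = rng.uniform(size=(2000, 3))
y_train = true_field(train) + 0.1 * rng.normal(size=2000)
w = fit_ridge(embed(train), y_train)

test = rng.uniform(size=(200, 3))
rmse = np.sqrt(np.mean((predict(w, embed(test)) - true_field(test)) ** 2))
print(f"features: {embed(test).shape[1]}, test RMSE: {rmse:.3f}")
```

The size of the regression is set by the number of basis functions (246 features here) rather than by the number of observations, so no n × n covariance matrix is ever formed. A DNN trained by stochastic gradient descent on the same features would avoid even the 247 × 247 solve used by this stand-in.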

“Our approach also goes beyond conventional machine learning methods by incorporating deep quantile regression frameworks to quantify uncertainty. This is very important to inform decision-making and research and will help improve our understanding of complex phenomena,” Nag says.
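Deep quantile regression is typically trained with the quantile, or “pinball,” loss: for a target quantile level τ, under-predictions are weighted by τ and over-predictions by 1 − τ, so the minimizer is the τ-quantile rather than the mean. The minimal sketch below (our illustration; the article does not give the authors' loss or architecture) defines that loss and checks numerically that minimizing it over a single constant recovers the empirical quantile of simulated data, to within the grid spacing. The function names are hypothetical.

```python
import numpy as np


def pinball_loss(y, q_pred, tau):
    """Mean quantile (pinball) loss at level tau, with residual r = y - q_pred.

    Each term is tau * r when r >= 0 (under-prediction) and (tau - 1) * r when r < 0.
    """
    r = y - q_pred
    return np.mean(np.maximum(tau * r, (tau - 1.0) * r))


def multi_quantile_loss(y, q_preds, taus):
    """Sum of pinball losses; column j of q_preds is the prediction for level taus[j]."""
    return sum(pinball_loss(y, q_preds[:, j], tau) for j, tau in enumerate(taus))


# Minimizing the loss over a single constant c recovers the empirical tau-quantile.
rng = np.random.default_rng(0)
y = rng.normal(size=10_000)
grid = np.linspace(-3.0, 3.0, 601)  # step of 0.01
for tau in (0.05, 0.5, 0.95):
    best = grid[np.argmin([pinball_loss(y, c, tau) for c in grid])]
    print(f"tau={tau}: argmin={best:.2f}, np.quantile={np.quantile(y, tau):.2f}")
```

A network with one output per quantile level, trained on a loss like `multi_quantile_loss`, could predict, say, the 5th, 50th and 95th percentiles at each location and time; the outer two would bracket a nominal 90% prediction interval.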

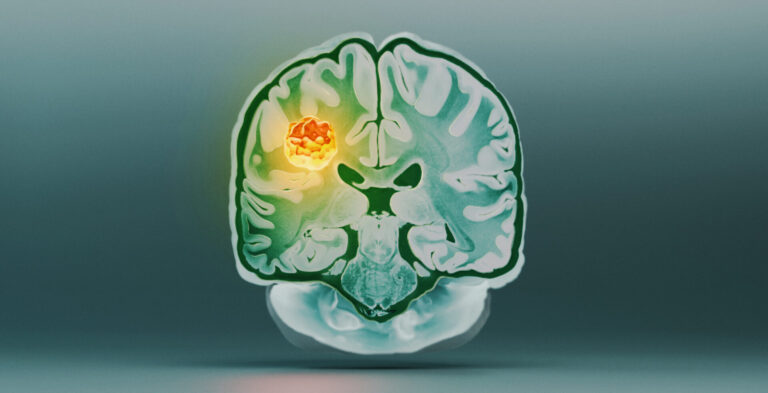

The team applied the approach to air-quality forecasting across the entire United States for a select period of 2023. The model ran far faster than conventional methods, and it also resolved important features of the data, such as significant elevations of airborne particulates in urban centers, without requiring any user-specified assumptions.

“Our model will be useful for forecasting complex spatiotemporal processes and is highly scalable to handle very large datasets efficiently with minimal computational resources,” says Nag. “We are now exploring other applications of this method and even better ways of quantifying uncertainties.”

Reference

- Nag, P., Sun, Y. & Reich, B.J. Spatio-temporal DeepKriging for interpolation and probabilistic forecasting. Spatial Statistics 57, 100773 (2023).

You might also like

Applied Mathematics and Computational Sciences

Smarter MRI image analysis for the whole heart

Applied Mathematics and Computational Sciences

Realistic scenario planning for solar power

Statistics

Checking your assumptions

Statistics

Internet searches offer early warnings of disease outbreaks

Statistics

Joining the dots for better health surveillance

Statistics

Easing the generation and storage of climate data

Applied Mathematics and Computational Sciences

Bringing an old proof to modern problems

Applied Mathematics and Computational Sciences