Electrical Engineering

Visual computing hits a moving target

A surprisingly simple algorithm helps computers perceive individual objects inside videos from their movement patterns.

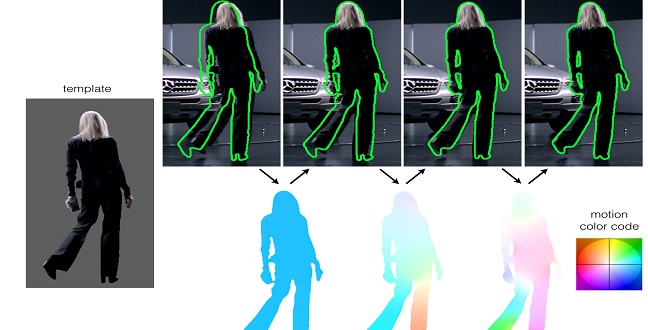

A new video analysis tool can automatically isolate objects from their background by tracking how shapes move, deform and come into view.

© 2015 KAUST

Computer programs that automatically spot objects and boundaries in videos are fundamental to today’s film industry as well as future robotic technology. Taking a cue from nature, researchers from KAUST have developed a way to use the distinctive motion patterns of an object to identify and track its shape¹.

Most object-detection algorithms analyze cues such as color and texture to distinguish an object from its background. When the image information becomes too complex, however, the elementary statistics used for segmentation begin to break down, causing detection errors. To locate objects with sufficient certainty, current programs must be ‘trained’ on large sets of simulated data covering many viewpoints and illumination conditions.
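To see why elementary statistics struggle, consider a deliberately simple intensity-based segmenter. The sketch below is a hypothetical illustration, not any specific program's method: it splits one scanline of grayscale values into "object" and "background" by repeatedly moving the threshold to the midpoint of the two class means (a classic iterative thresholding scheme). It works when the object is brighter than its surroundings, and it produces a meaningless split when object and background share the same statistics, as with camouflage.

```python
def two_means_segment(pixels, iters=20):
    """Label each intensity as object (True) or background (False).

    Hypothetical toy segmenter: the threshold is moved to the midpoint of the
    two class means until it stops changing (iterative mean thresholding).
    """
    t = sum(pixels) / len(pixels)  # start from the global mean
    for _ in range(iters):
        fg = [p for p in pixels if p > t]
        bg = [p for p in pixels if p <= t]
        if not fg or not bg:  # one class is empty: nothing left to refine
            break
        new_t = (sum(fg) / len(fg) + sum(bg) / len(bg)) / 2
        if new_t == t:  # converged
            break
        t = new_t
    return [p > t for p in pixels]


# Bright object (indices 3-5) on a dark background: the split is clean.
print(two_means_segment([10, 12, 11, 200, 205, 198, 9, 11]))
# -> [False, False, False, True, True, True, False, False]

# Camouflaged object: same intensity statistics as the background, so the
# "segmentation" just follows pixel noise instead of the object.
print(two_means_segment([50, 52, 50, 52, 50, 52, 50, 52]))
# -> [False, True, False, True, False, True, False, True]
```

On the camouflaged scanline the mask simply tracks the alternating noise values; nothing in the intensities tells the segmenter where the object is.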

Ganesh Sundaramoorthi and Yanchao Yang, from KAUST’s Computer, Electrical and Mathematical Sciences and Engineering Division, chose to tackle this problem with a dynamic model based on object movement. Just as humans recognize a moving animal more easily than a static, camouflaged one, the researchers theorized that comparing how shapes move, deform, or leave the field of view as a video plays could separate objects from their backgrounds, even under complex conditions.

Computing shapes from movement patterns, however, can be ambiguous. Sundaramoorthi gives the example of a traditional barbershop pole: as the pole spins, its stripes appear to travel along it, up or down, even though they are only rotating in place. Adding to the uncertainty, object motion in videos is entangled with many other factors and sources of noise, which are hard for algorithms to separate out.
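The barbershop-pole ambiguity is usually called the aperture problem. A minimal sketch, using a hypothetical diagonal-stripe pattern rather than anything from the KAUST study: because the brightness depends only on x + y, sliding the stripes sideways by one pixel per frame produces exactly the same frames as sliding them vertically, so an algorithm that looks only at the pixels cannot tell the two motions apart.

```python
def stripe(x, y, period=8):
    """Diagonal stripes: brightness depends only on x + y (1 = light, 0 = dark)."""
    return 1 if (x + y) % period < period // 2 else 0


def render(t, u, v, size=16):
    """Frame t of the stripe pattern translated by (u, v) pixels per frame."""
    return [[stripe(x - u * t, y - v * t) for x in range(size)] for y in range(size)]


# Two physically different motions: purely sideways vs purely vertical.
sideways = [render(t, 1, 0) for t in range(5)]
vertical = [render(t, 0, 1) for t in range(5)]

print(sideways == vertical)                 # True: identical video, different motion
print(render(0, 1, 0) == render(1, 1, 0))   # False: the pattern really does move
```

In optical-flow terms, only the motion component perpendicular to the stripes is observable here; the component along them is left undetermined.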

The KAUST team designed an algorithm that calculates how shapes in one frame deform to match the next, and that checks for occlusions: the appearance or disappearance of objects from view. They found that these two factors were key to recovering movement.
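The two ingredients named above, deforming a shape to match the next frame and checking for occlusions, can be illustrated on a one-dimensional toy signal. This sketch is a heavily simplified assumption, not the authors' method: the "shape" is a set of pixel indices, its "deformation" is reduced to a single integer shift chosen so that the most object pixels reappear with the same value (the hypothetical helper `match_shift`), and any object pixel still lacking a match afterwards is flagged as occluded (the hypothetical helper `occluded`).

```python
def match_shift(f0, f1, region, max_shift=4, tol=0.5):
    """Pick the integer shift that lets the most region pixels of f0 reappear in f1."""
    def inliers(s):
        return sum(
            1 for i in region
            if 0 <= i + s < len(f1) and abs(f1[i + s] - f0[i]) <= tol
        )
    return max(range(-max_shift, max_shift + 1), key=inliers)


def occluded(f0, f1, region, shift, tol=0.5):
    """Region pixels with no matching value after the shift (hidden or out of frame)."""
    return [
        i for i in region
        if not (0 <= i + shift < len(f1)) or abs(f1[i + shift] - f0[i]) > tol
    ]


frame0 = [0, 0, 5, 6, 7, 8, 0, 0, 0, 0, 0, 0]
frame1 = [0, 0, 0, 0, 0, 5, 6, 7, 1, 0, 0, 0]  # object moved right by 3; its last pixel is covered
region = [2, 3, 4, 5]                         # object pixels in frame0

s = match_shift(frame0, frame1, region)
print(s, occluded(frame0, frame1, region, s))  # -> 3 [5]
```

Counting matches rather than summing squared errors keeps the hidden pixel from dragging the estimate off: with a plain sum of squared differences, the large mismatch at the occluded pixel would favor a shift of 2 in this example.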

But solving the resulting equations can demand heavy computational resources. So the researchers designed a geometry-based framework that encodes shape deformations in a compact mathematical metric. “Surprisingly, this leads to a simple and efficient computational algorithm that eliminates the need for sensitive tuning parameters,” says Sundaramoorthi.
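The cited paper's title refers to "Sobolev descent". In Sobolev-type active contours, a common ingredient is to replace the ordinary (L2) gradient of the energy with a Sobolev (H1) gradient, which smooths the update along the contour so that neighboring points move together. The sketch below assumes a closed contour sampled at n points and the simplest H1-type operator, solving (I - lam * Laplacian) h = g with the periodic second-difference Laplacian via a naive DFT. The function name `sobolev_gradient` and the weight `lam` are hypothetical illustration choices, and the paper's coarse-to-fine, region-based scheme is not reproduced.

```python
import cmath
import math


def sobolev_gradient(g, lam=4.0):
    """Solve (I - lam * Laplacian) h = g on a closed, periodic contour via the DFT."""
    n = len(g)
    # Forward DFT of the ordinary (L2) gradient samples.
    G = [sum(g[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
         for k in range(n)]
    # The periodic second-difference Laplacian has eigenvalues -4 sin^2(pi k / n),
    # so each frequency is divided by 1 + lam * 4 sin^2(pi k / n).
    H = [G[k] / (1 + lam * 4 * math.sin(math.pi * k / n) ** 2) for k in range(n)]
    # Inverse DFT; the result is real up to round-off.
    return [(sum(H[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n).real
            for m in range(n)]


spike = [1.0] + [0.0] * 15   # L2 gradient that pushes a single contour point
smooth = sobolev_gradient(spike)
print([round(v, 3) for v in smooth])
```

The single-point spike becomes a smooth bump spread around the whole contour with the same total (the zero-frequency term is left unchanged), so a descent step moves the contour coherently instead of pulling out one point.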

In trials on videos, the new algorithm produced a template that warps around objects and captures their shapes far more effectively than contemporary programs. “Even though the motions uncovered by our algorithm may not coincide exactly with reality, they are sufficient to segment objects accurately,” notes Sundaramoorthi. “This takes us one step closer to automating processes in robotic control and high-quality three-dimensional videos.”

References

- Yang, Y. & Sundaramoorthi, G. Shape tracking with occlusions via coarse-to-fine region-based Sobolev descent. IEEE Transactions on Pattern Analysis and Machine Intelligence 37, 1053–1066 (2015).

You might also like

Bioengineering

Self-aware biosensors boost digital health monitoring

Bioengineering

Smart patch detects allergies before symptoms strike

Computer Science

Green quantum computing takes to the skies

Electrical Engineering

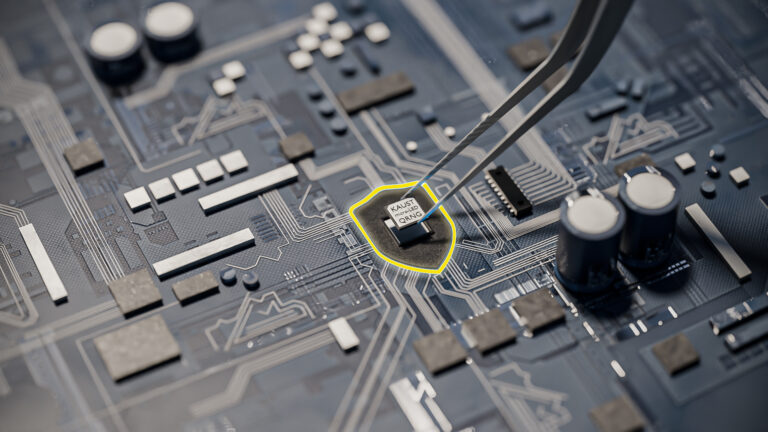

Micro-LEDs boost random number generation

Bioengineering

Sensing stress to keep plants safe

Computer Science

Sweat-sniffing sensor could make workouts smarter

Electrical Engineering

New tech detects dehydration by touching a screen

Electrical Engineering